Meta desperately needs to fix the rankings in their store. Here's how to do it. (UPDATED!)

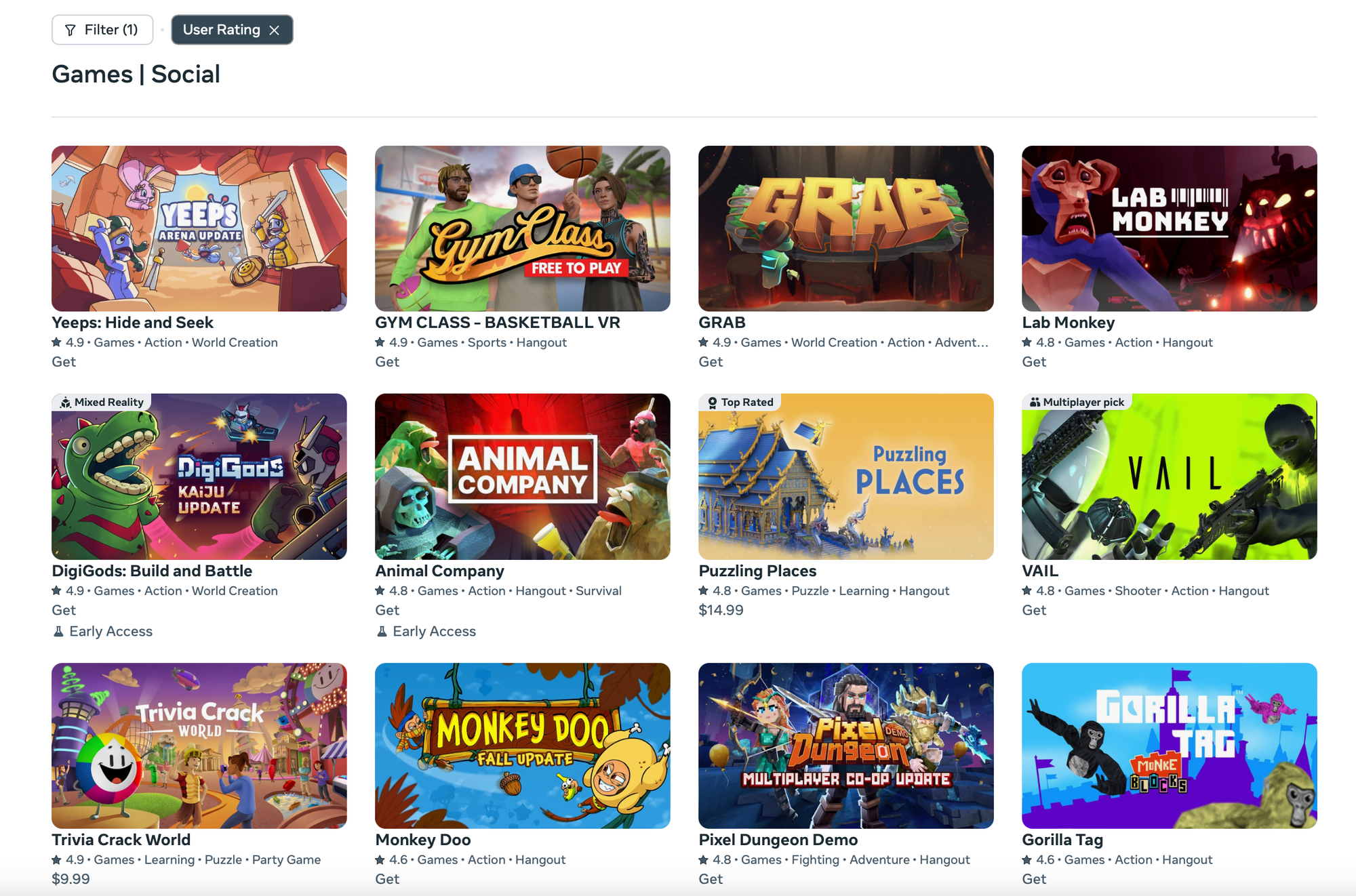

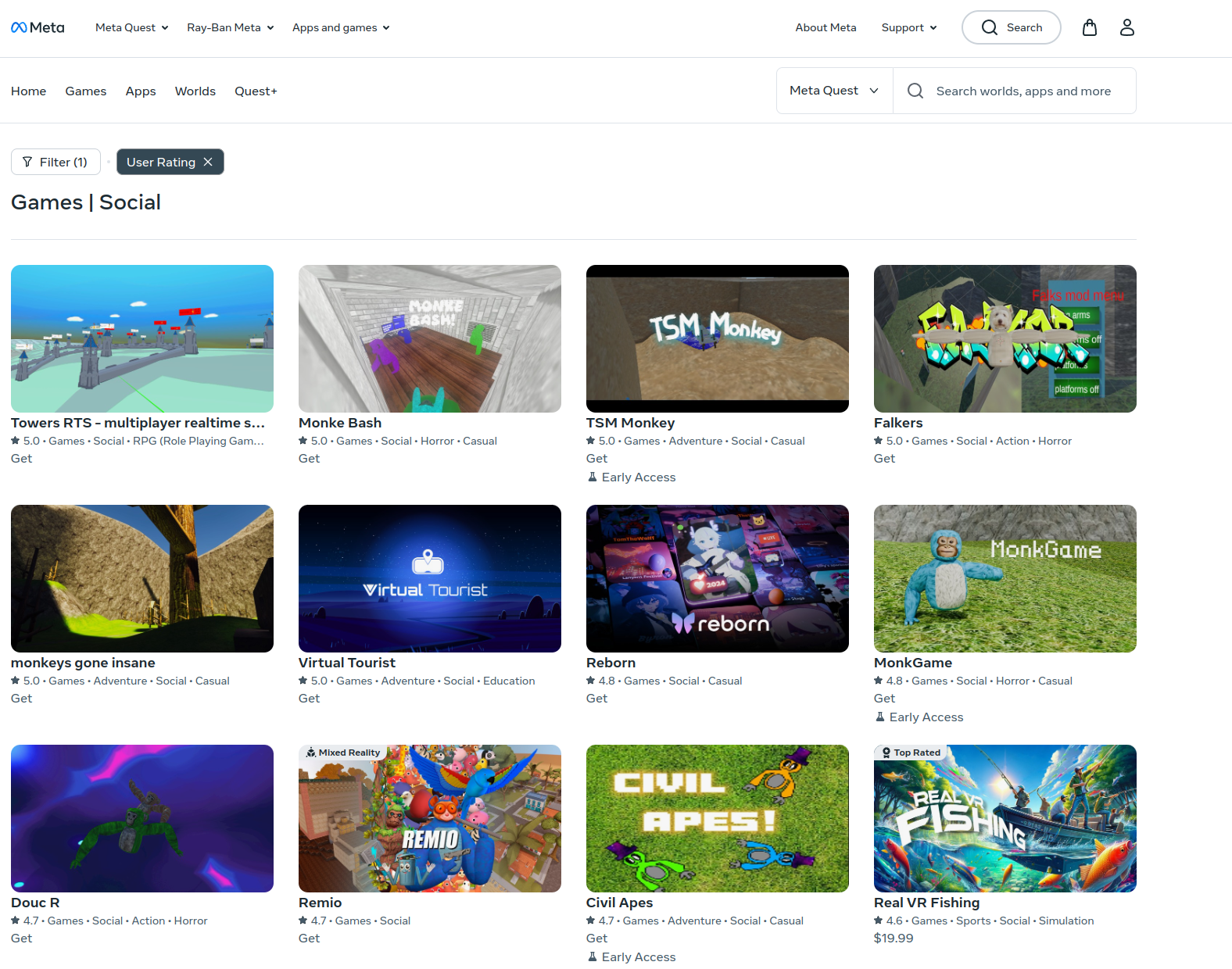

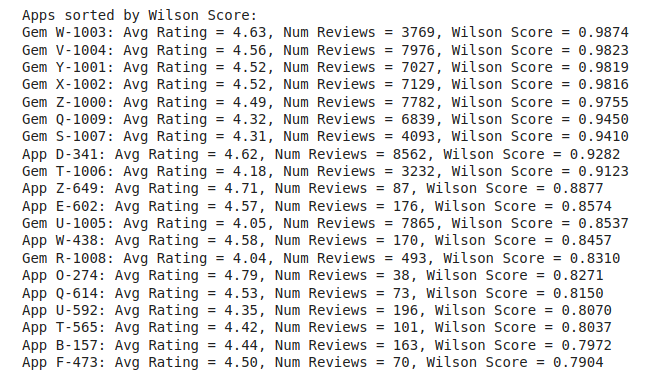

Update: I shared this article in a few places, and a few weeks later an engineer from Meta, whom I'll keep anonymous, DMed me and told me to check out the rankings in the store. I did a quick category search and sorted by average rating, and this is what I found.

It looks like Meta implemented a better ranking algorithm that takes the effects we pointed out into consideration. It works much better now, and I'm thrilled that Meta is taking our feedback seriously and acting on it.

Recently at Meta Connect, Andrew Bosworth went on stage and apologized to the Meta Quest developer community for how things have been over the last few years.

I appreciate the apology, and I want to help add energy to this “new Meta” by pointing out an issue that is hurting the platform and suggesting a fix.

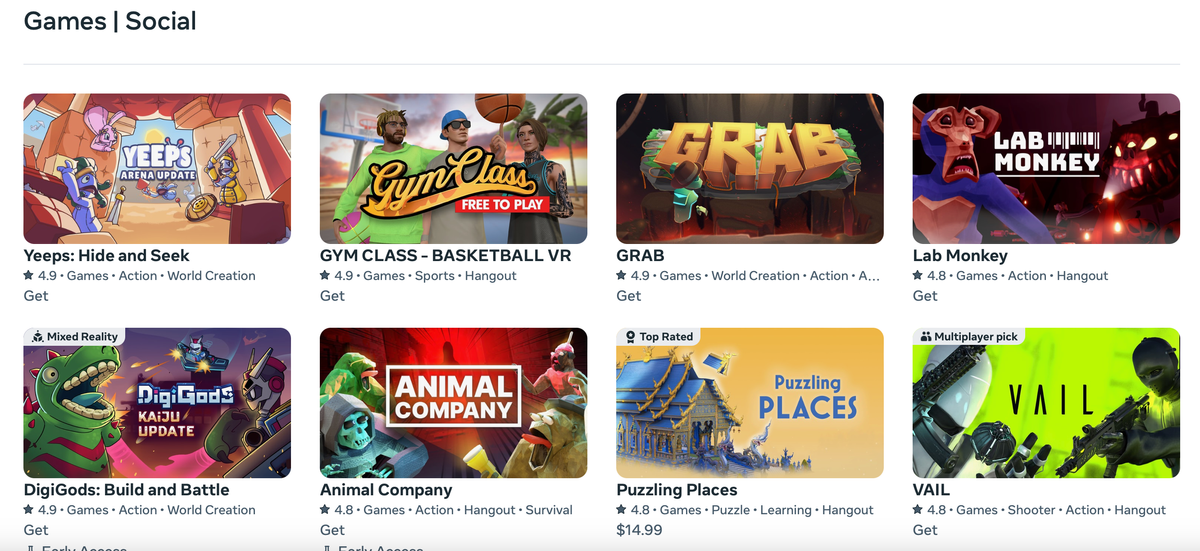

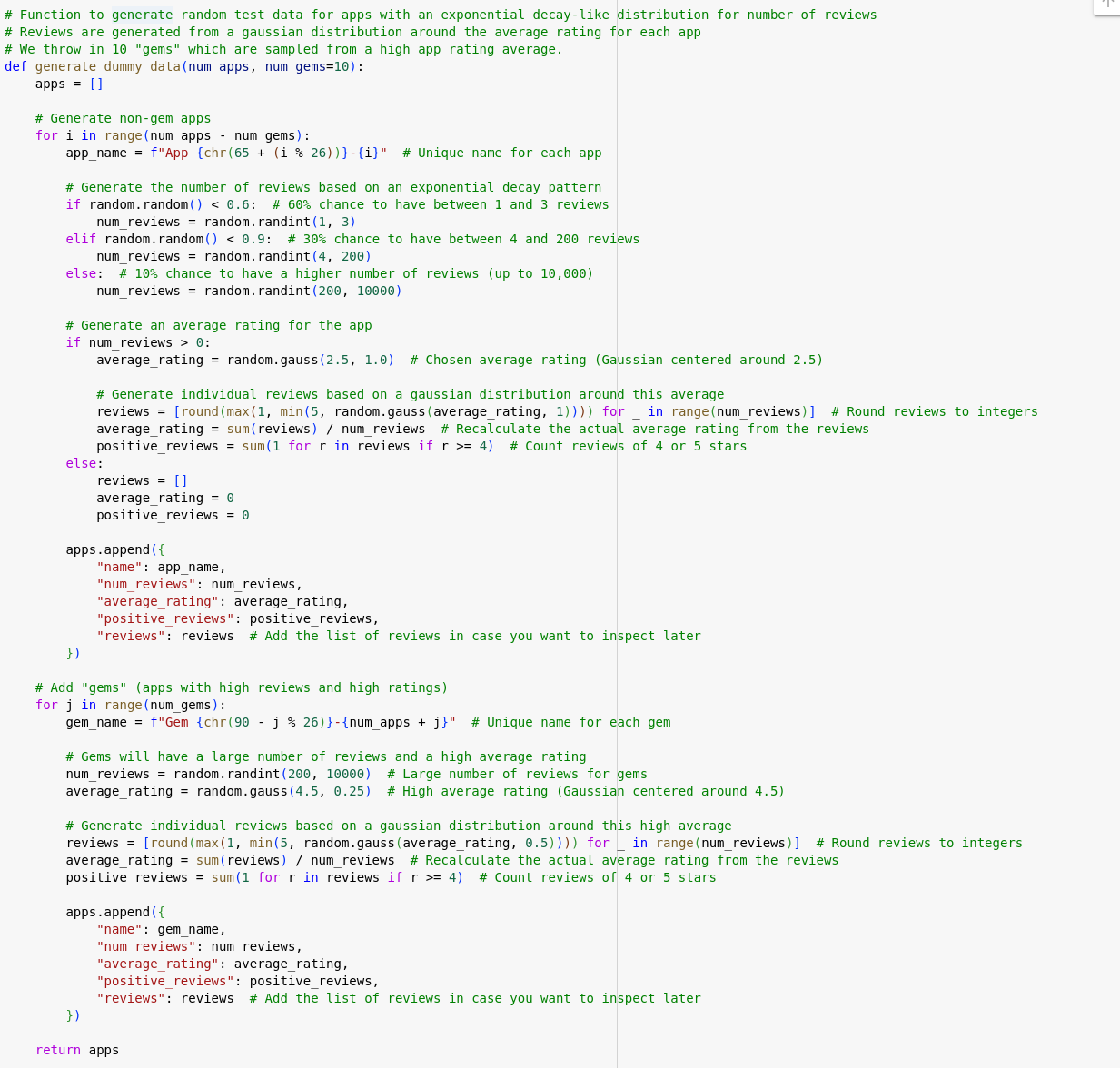

The Quest community has recently faced a lot of issues with how results on the store are sorted. For example, if you go to the Games | Social category and sort by “rating”, this is what you get:

Notice how really well-known, beloved apps with thousands of reviews like VRChat, Gorilla Tag, Gym Class, and Yeeps are missing? The first game I’ve actually heard of in the list is Real VR Fishing. Great game, but hardly the top social VR game on the platform. What’s going on here?

Well, I dug deeper and figured out what seems to be the source of the issue. When you sort by user rating, the results are sorted by average user rating. A bunch of apps have a single 5-star review (giving them a 5-star average), and they are drowning out the apps that actually have a real sample of reviews.
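To make the effect concrete, here is a tiny sketch with two made-up apps (the names and review counts are hypothetical, not real store data). It shows that a plain average puts the app with one 5-star review ahead of an app with a thousand mostly great reviews.

```python
# Two hypothetical apps: one with a single 5-star review,
# one with 1000 reviews that are mostly 5 stars.
apps = {
    "one_review_app": [5],
    "established_app": [5] * 800 + [4] * 150 + [3] * 50,
}


def average_rating(reviews):
    """Plain arithmetic mean of the star ratings."""
    return sum(reviews) / len(reviews)


# Sort by average rating, highest first (the behavior described above).
ranked = sorted(apps, key=lambda name: average_rating(apps[name]), reverse=True)

for name in ranked:
    print(name, round(average_rating(apps[name]), 2), len(apps[name]))
```

The single-review app lands on top even though the established app's 4.75 average is backed by far more evidence.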

For the most part, the apps in the current top rankings are relatively new and haven’t been played or reviewed by many players. In my mind as a developer, these are not “good” apps to rank first. They might be good games and should be somewhere on the list, but ranking them above more established apps with a larger number of reviews makes for a bad experience for the Quest Store user who is looking for new games to play and wants to find what other players like.

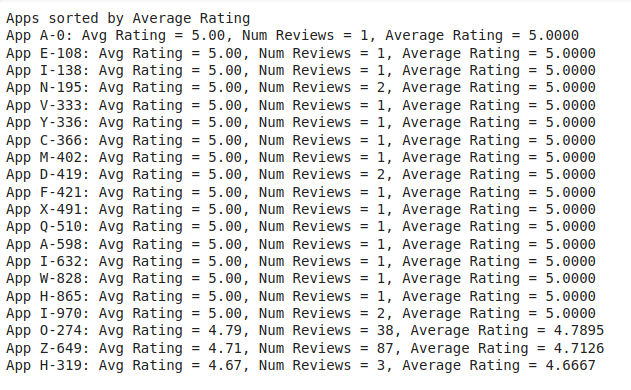

But what is Meta to do? How can they sort by rating without these apps dominating the top spots? Well, I’m a developer and I know how to use ChatGPT, so I spent 15 minutes and whipped together a simulated app store with review patterns that mimic the Quest store.

I created 1000 apps with ratings averaging 2.5 stars with a standard deviation of 1. For each app I generated a variable number of reviews from 1-5 stars and then re-calculated the averages based on the reviews. I then threw in 10 “gems” which are apps with much higher average ratings and higher number of reviews. These are the Gorilla Tags of our simulated app store.
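A simulation along these lines can be sketched in a few lines of Python. This is not the notebook code, just a minimal approximation: the review-count distributions, the gem quality of about 4.6 stars, the per-review noise, and the `simulate_store` name are all assumptions picked for illustration.

```python
import random


def simulate_store(n_apps=1000, n_gems=10, seed=0):
    """Build a toy app store: many ordinary apps plus a few well-reviewed gems."""
    rng = random.Random(seed)
    apps = []
    for i in range(n_apps + n_gems):
        is_gem = i >= n_apps
        if is_gem:
            # Assumption: gems have high quality and lots of reviews.
            quality = rng.gauss(4.6, 0.1)
            n_reviews = rng.randint(500, 5000)
        else:
            # Ordinary apps: quality ~ N(2.5, 1), usually few reviews.
            quality = rng.gauss(2.5, 1.0)
            n_reviews = max(1, int(rng.expovariate(1 / 20)))
        quality = min(5.0, max(1.0, quality))
        # Each review is the app's quality plus noise, clamped to 1-5 whole stars.
        reviews = [
            min(5, max(1, round(rng.gauss(quality, 1.0))))
            for _ in range(n_reviews)
        ]
        apps.append({
            "name": f"app_{i}",
            "is_gem": is_gem,
            "reviews": reviews,
            # Average is re-calculated from the generated reviews.
            "avg": sum(reviews) / len(reviews),
        })
    return apps


store = simulate_store()
top_by_avg = sorted(store, key=lambda a: a["avg"], reverse=True)[:20]
for app in top_by_avg:
    print(app["name"], round(app["avg"], 2), len(app["reviews"]), app["is_gem"])
```

Printing the top 20 by plain average is a quick way to see how many low-review apps crowd the head of the list.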

When we rank by average rating, we get the same phenomenon:

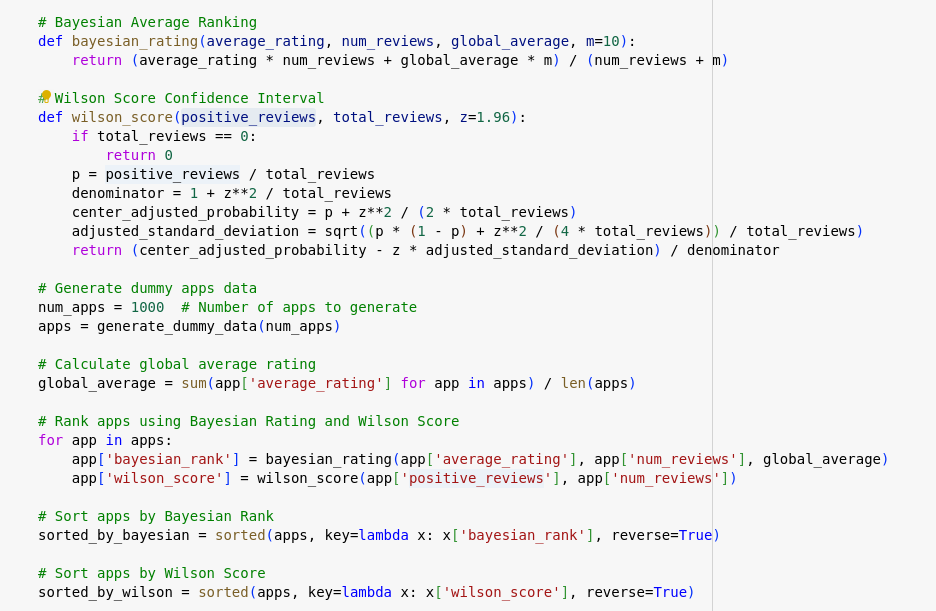

This kind of ranking problem is actually common across the web. Sites like Reddit need to sort comments that were posted at different times and have had different amounts of time to gather upvotes and downvotes. They use a variety of methods, but the two best suited to solving our problem are Bayesian Averages and Wilson Score Confidence Intervals (what Reddit uses).

Let’s implement those two methods and then see how they do!
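Here is a rough sketch of both scores, not necessarily how the notebook does it. The Bayesian average pulls every app toward a prior mean, with the pull fading as reviews accumulate. The Wilson score is defined for yes/no votes, so this version assumes each star rating is mapped to a 0 to 1 "fraction positive" (1 star = 0, 5 stars = 1). The prior values, that mapping, and the function names are my assumptions.

```python
import math


def bayesian_average(reviews, prior_mean=2.5, prior_weight=20):
    """Average that behaves as if the app started with `prior_weight`
    reviews at `prior_mean` stars (both values are assumptions)."""
    return (prior_weight * prior_mean + sum(reviews)) / (prior_weight + len(reviews))


def wilson_lower_bound(reviews, z=1.96):
    """Lower bound of the Wilson score interval (z=1.96 is about 95%).

    Assumption: stars are rescaled so 1 star = 0.0 and 5 stars = 1.0,
    and the mean of those values is treated as the positive fraction.
    """
    n = len(reviews)
    if n == 0:
        return 0.0
    p = sum((r - 1) / 4 for r in reviews) / n
    denom = 1 + z * z / n
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre - margin) / denom


single = [5]                        # one 5-star review
established = [5] * 800 + [4] * 200  # 1000 reviews, 4.8 average

for label, reviews in [("single", single), ("established", established)]:
    print(label,
          round(bayesian_average(reviews), 3),
          round(wilson_lower_bound(reviews), 3))
```

With either score, the app with one perfect review drops well below the app with a thousand strong reviews, which is the behavior we want from the "sort by rating" option.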

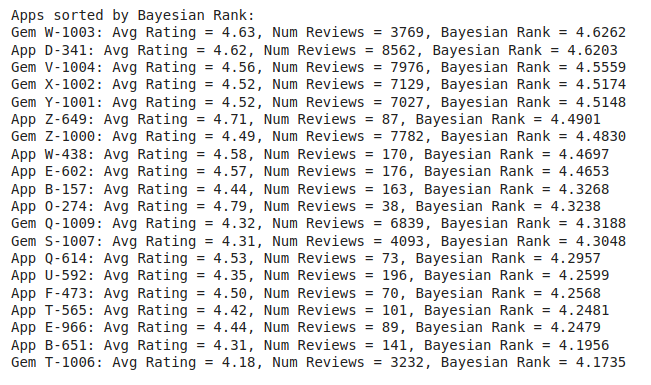

As expected, the two methods do a much better job of sorting the apps. We get all of the gems in the top 20 apps along with a bunch of other “good” apps that have a lot of ratings with a high average. All the single-review apps are now properly sorted and can’t drown out the apps users are probably looking for.

Adding a ranking algorithm like this is a really easy backend change for Meta to make. (Writing this blog post actually took way longer than writing the simulation and ranking code.)

If they truly are listening to us now and working to improve the developer experience on Quest, I hope they’ll take this example and run with it to improve the app store ranking system ASAP. It will lead to better retention, better sales, and an overall better user experience.

Here's a link to my full code as a Colab Notebook if you want to check it out: